Hope and risk: The rise of generative AI in society

Generative AI has become a captivating phenomenon worldwide, fuelled by the recent success of ChatGPT, a text-generation chatbot. In fact, our latest research reveals that 67% of consumers globally are now familiar with generative AI technologies, and in certain markets like Singapore, nearly half (45%) already have experience in utilising this technology.

Generative AI has already revolutionised various fields, unlocking new possibilities and transforming traditional practices. But along with its promising potential, significant risks also emerge.

The era of low-cost, high-impact disinformation

In the past, orchestrating large-scale disinformation campaigns demanded significant resources and manpower. Coordinated efforts from multiple individuals were required for effective operations. However, the emergence of generative AI has fundamentally transformed the landscape, making it easier and more affordable than ever to create and disseminate compelling fake news stories, social media posts, and other forms of disinformation. These powerful AI systems have reached a level of sophistication where the content they generate is almost indistinguishable from human-created material, blurring the lines between what is true and what isn’t.

Nowadays, a single malicious actor armed with a large language model can exploit the capabilities of generative AI to fabricate a false story that convincingly looks and reads like a legitimate news article. They can even include quotes from fictional sources and construct a compelling narrative that resonates with unsuspecting readers. Leveraging the speed and connectivity of social media platforms, this type of disinformation can rapidly spread and reach millions of people within a matter of hours.

The dissemination of disinformation becomes even more pervasive when social media platforms lack robust identity verification checks during the account creation stage. In such cases, numerous fake accounts can easily be opened, serving as amplifiers for spreading disinformation at an unprecedented scale. This absence of stringent verification procedures not only facilitates the proliferation of false narratives but also exacerbates the challenges faced by individuals in detecting and discerning the authenticity of the information they encounter online.

Exploiting the potential of generative AI

Generative AI not only fuels disinformation but also opens up new avenues for fraud and social engineering scams. In the past, scammers typically relied on pre-made scripted responses or rudimentary chatbots to engage with their victims. These scripted interactions often lacked relevance to specific queries, making it easier for potential victims to detect the fraudulent nature of the interaction. However, with the advancements in generative AI technology, scammers now have the ability to programme chatbots that mimic human interaction with remarkable accuracy.

Leveraging large language models, these AI-powered chatbots can analyse the messages they receive, understand the context of the conversation, and generate human-like responses that are devoid of the tell-tale signs associated with older chat scams. This represents a significant departure from previous fraud techniques, enabling fraudsters to extract information from their victims far more effectively and convincingly.

These AI-driven fraudsters can also exploit the trust and vulnerability of individuals by impersonating someone the victim knows personally or a public figure. Our research indicates that over half of global consumers (55%) are aware that AI can be utilised to create audio deepfakes, imitating the voices of people they know personally or of public figures, with the aim of tricking them into divulging sensitive information or providing financial resources. Yet, despite this awareness, in 2022 these scams defrauded victims in the U.S. of over $11 million.

Harnessing AI for defence

Despite the risks posed by generative AI, AI can also be used as a defence mechanism against these threats.

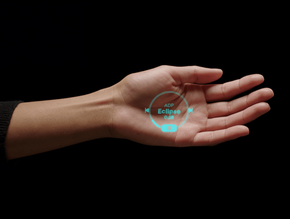

One effective approach is to employ AI for identity verification and authentication. Social media platforms and online service providers can implement multimodal biometrics, which combine multiple forms of biometric data, such as voice or iris recognition, with machine learning algorithms. This integration improves the accuracy of identity verification and adds an extra layer of security. Furthermore, multimodal biometric systems incorporate liveness detection, which helps identify and flag accounts that are created using face-morphs and deepfakes.
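As a rough illustration of how several biometric signals might be combined, the sketch below uses weighted score-level fusion with a liveness gate. This is only one possible design under stated assumptions, not a description of any particular product: the names `ModalityScore`, `fuse_scores` and `verify_identity`, the weights, and the 0.8 threshold are all hypothetical, and the per-modality match scores are assumed to come from separate machine learning models that are not shown.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ModalityScore:
    """Match score from one biometric modality (hypothetical structure)."""
    name: str      # e.g. "face", "voice", "iris"
    score: float   # similarity in [0, 1], assumed to come from an ML model
    weight: float  # relative trust placed in this modality


def fuse_scores(scores: Sequence[ModalityScore]) -> float:
    """Combine per-modality scores into a single weighted average."""
    total_weight = sum(s.weight for s in scores)
    if total_weight <= 0:
        raise ValueError("at least one modality with positive weight is required")
    return sum(s.score * s.weight for s in scores) / total_weight


def verify_identity(
    scores: Sequence[ModalityScore],
    liveness_passed: bool,
    threshold: float = 0.8,  # illustrative value, not a recommendation
) -> bool:
    """Accept only if the liveness check passed and the fused score is high enough."""
    # A failed liveness check (for example a suspected deepfake or face-morph)
    # rejects the attempt regardless of how well the biometrics match.
    if not liveness_passed:
        return False
    return fuse_scores(scores) >= threshold
```

In this sketch, a strong match on one modality cannot override a failed liveness check, which mirrors the idea that liveness detection flags deepfake-based attempts before any match score is considered.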

While it is challenging to prevent individuals from falling victim to generative AI-fuelled scams that convince them to provide personal information, AI-powered solutions can mitigate the subsequent misuse of that stolen data. For instance, if personal information is compromised via one of these advanced scams and then used in an attempt to access existing online accounts or create fraudulent ones, online providers equipped with multimodal biometric-based verification or authentication systems can detect and block the attempt before it goes any further. By requiring biometric verification, these systems add a barrier that scammers cannot easily bypass.

Luckily, our research indicates that consumers globally understand the value of biometric identity verification, with 80% saying it is needed when accessing financial services accounts online.

The rise of generative AI has not only revolutionised fields such as content creation and automation but has also introduced significant risks in the form of disinformation and fraud. However, it is essential to approach this technology with a balanced perspective. While acknowledging the potential harms, we must also recognise the promise of AI itself as a tool to combat these threats. By leveraging AI-powered solutions for identity verification and authentication, we can enhance trust and confidence in navigating the digital world while mitigating the risks posed by generative AI.